March 13, 2022

Updated on Dec 15, 2025

Delivering Vercel-Style Developer Experience with Kubernetes and GitLab, part IV

Kubernetes manifests

This article is the fourth part of the Delivering Vercel-Style Developer Experience with Kubernetes and GitLab series.

Delivering Vercel-Style Developer Experience with Kubernetes and GitLab

- Introduction: Why build your own developer platform?

- Part I: Cluster setup

- Part II: GitLab pipeline and CI/CD configuration

- Part III: Applications and the Dockerfile

- Part IV: Kubernetes manifests

In the previous parts, I set up a Kubernetes cluster, configured GitLab repositories and pipeline stages, and then wrote the Dockerfiles.

Now, I'm going to create three manifests to describe resources I want to add to the Kubernetes cluster: an Ingress rule, a Service and a Deployment.

- Introduction

- Add an Ingress rule to route requests to a Service

- Add a Service to forward requests to Pods

- Add a Deployment to forward requests to the container

TL;DR

All Kubernetes manifests are available here if you wish to skip the detailed explanation:

Introduction

Previously, I modeled how traffic flows from the client to the application with the following diagram. In this part, I'm setting up components 4, 5 and 6:

- ✓ 1. client: DNS, 443/TCP (part I)
- ✓ 2. host: k0s installed (part I)
- ✓ 3. ingress: ingress-nginx installed (part I)
- 4. service
- 5. pod
- 6. container
- ✓ 7. application: Dockerfile (part III)

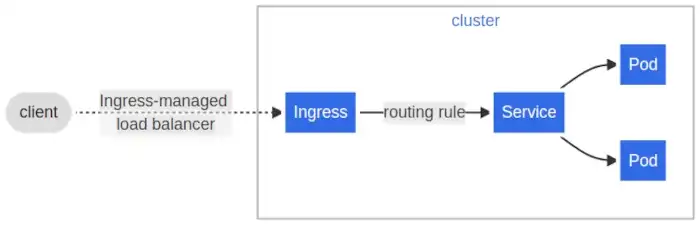

The Kubernetes documentation illustrates this flow in a similar way: these components sit in the grey rectangle labeled cluster in the following picture:

Traffic flow according to Kubernetes documentation

To set them up, I've created three Kubernetes manifests. They are identical across the Node.js, PHP, Python and Ruby repositories, so the excerpts below apply to every example application.

To keep things simple, I'm not using any template engine or tool such as Kustomize, Helm, Jsonnet or ytt.

Instead, I'm doing a simple find-and-replace on __TOKEN__ strings in the manifest files, a task I explained in part II.
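The pipeline's own replacement step is the one described in part II. Purely as an illustration of the idea, here is a minimal Python sketch, assuming tokens are upper-case names wrapped in double underscores (as in __KUBE_INGRESS_HOST__) and that values come from a mapping such as os.environ. The function name `render` is hypothetical.

```python
import re
from typing import Mapping

# Matches placeholders such as __KUBE_INGRESS_HOST__ or __CI_COMMIT_SHORT_SHA__.
# The lazy quantifier stops at the first closing "__", so two tokens separated
# by ":" (as in the image reference) are matched separately.
TOKEN = re.compile(r"__([A-Z][A-Z0-9_]*?)__")


def render(template: str, values: Mapping[str, str]) -> str:
    """Replace every __NAME__ token with values[NAME]; fail loudly on unknown tokens."""

    def substitute(match: "re.Match[str]") -> str:
        name = match.group(1)
        if name not in values:
            # Better to stop the job than to apply a half-rendered manifest.
            raise KeyError(f"no value provided for __{name}__")
        return values[name]

    return TOKEN.sub(substitute, template)
```

In a CI job, something like `render(open("deployment.yml").read(), os.environ)` would fill the tokens from the job's environment variables. Raising on an unknown token, rather than leaving it in place, keeps a manifest with a literal `__KUBE_INGRESS_HOST__` from ever reaching the cluster.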

1. Add an Ingress rule to route requests to a Service

An Ingress is not a component in itself, in the sense that it doesn't translate to a pod deployed to the cluster with its own IP address on the cluster's internal network.

It's a routing rule that instructs the cluster's ingress controller to forward incoming connections to a given Service. The next section explains what a Service is.

In my case, since I've installed ingress-nginx, this new rule tells the ingress-nginx-162... pod I was talking about in part I to forward traffic received from the host to the Service I'll define in the next section.

So, to define such a rule, I create an Ingress resource in the ingress.yml file, which routes a specific URL to a specific Service:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
# ...
spec:
  ingressClassName: nginx # applies to requests received by this ingress controller
  rules:
    - host: __KUBE_INGRESS_HOST__ # route requests for this domain name
      http:
        paths:
          - path: / # regardless of the requested path
            pathType: Prefix # required by networking.k8s.io/v1; "/" then matches every path
            backend:
              service:
                name: api # to this service (in the same namespace)
                port:
                  number: 3000 # using this TCP port
```
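To make the rule's semantics concrete, here is a toy Python model of how a controller could pick a backend for a request, assuming Prefix path matching (what a `/` path meant to catch every request relies on) and the "longest matching path wins" precedence. It is not how ingress-nginx is implemented, since that controller turns Ingress rules into nginx configuration; `Rule`, `prefix_matches` and `route` are hypothetical names.

```python
from typing import List, NamedTuple, Optional


class Rule(NamedTuple):
    host: str      # spec.rules[].host
    path: str      # spec.rules[].http.paths[].path, treated as a Prefix path
    service: str   # backend.service.name
    port: int      # backend.service.port.number


def prefix_matches(prefix: str, request_path: str) -> bool:
    """Element-wise prefix match: '/' matches everything, '/api' matches '/api/x' but not '/apix'."""
    prefix_parts = [part for part in prefix.split("/") if part]
    request_parts = [part for part in request_path.split("/") if part]
    return request_parts[: len(prefix_parts)] == prefix_parts


def route(rules: List[Rule], host: str, path: str) -> Optional[Rule]:
    """Pick the rule for this host whose path is the longest matching prefix, if any."""
    candidates = [r for r in rules if r.host == host and prefix_matches(r.path, path)]
    return max(candidates, key=lambda r: len(r.path), default=None)
```

With the single `/` rule from ingress.yml, every path on the preview host lands on the api Service, and any other host gets no match (ingress-nginx then falls back to its default backend).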

Watching traffic flows

Unlike an Ingress, the ingress-nginx controller does translate to a pod with its own IP address, running nginx:

```shell
$ kubectl describe pod -A -l app.kubernetes.io/name=ingress-nginx
Name:         ingress-nginx-1644337728-controller-5cdf6b55b9-55qj4
...
Status:       Running
IP:           172.31.12.80
Containers:
  controller:
    Ports:       80/TCP, 443/TCP, 8443/TCP
    Host Ports:  80/TCP, 443/TCP, 8443/TCP
```

A simple way to see traffic being forwarded from this pod to a Service is to follow this pod's log stream while querying a deployed application. Here is the pod's output while running a curl command from another terminal:

```shell
# run this command in one terminal:
$ kubectl logs -f $(kubectl get pod -A \
    -l app.kubernetes.io/name=ingress-nginx -o name)

# run this command in a second terminal:
$ curl https://7c77eb36.nodejs.k0s.jxprtn.dev

# then the first terminal shows this new line:
192.123.213.23 - - [07/Mar/2022:20:00:10 +0000] "GET / HTTP/2.0" 200 15 "-" "curl/7.68.0" 34 0.001 [nodejs-7c77eb36-api-3000] [] 10.244.0.24:3000 25 0.004 200 ee266baf44f3cfd0838e28fa1ff79785
```

This log line shows that the request from the curl/7.68.0 client was forwarded to nodejs-7c77eb36-api-3000.

That is expected: the URL I'm requesting points to the api service, on port 3000, deployed in the nodejs-7c77eb36 namespace, which does exist:

```shell
$ kubectl get service api -n nodejs-7c77eb36 \
    -o 'custom-columns=NAMESPACE:metadata.namespace,SERVICE:metadata.name,PORT:spec.ports[*].targetPort'
NAMESPACE         SERVICE   PORT
nodejs-7c77eb36   api       3000
```
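The same check can be done programmatically. This Python sketch assumes the log line follows ingress-nginx's default upstream log format, and that the first bracketed field is the upstream name built as `<namespace>-<service>-<port>`, as nodejs-7c77eb36-api-3000 suggests. The regular expression only covers the fields used here; `parse` and `upstream_name` are hypothetical helpers.

```python
import re
from typing import Dict, Optional

# Leading fields of an ingress-nginx access log line (default format assumed).
LOG_LINE = re.compile(
    r'^(?P<client>\S+) - (?P<user>\S+) \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" (?P<status>\d{3}) '
    r'(?P<bytes>\d+) "(?P<referer>[^"]*)" "(?P<agent>[^"]*)" (?P<request_length>\d+) (?P<request_time>[\d.]+) '
    r'\[(?P<upstream>[^\]]*)\] \[(?P<alt_upstream>[^\]]*)\] (?P<upstream_addr>\S+)'
)


def parse(line: str) -> Optional[Dict[str, str]]:
    """Return the named fields of a log line, or None if it doesn't match."""
    match = LOG_LINE.match(line)
    return match.groupdict() if match else None


def upstream_name(namespace: str, service: str, port: int) -> str:
    """Upstream naming assumed from the log above: namespace-service-port."""
    return f"{namespace}-{service}-{port}"
```

Note the address after the two bracketed fields (10.244.0.24:3000 in the line above): that is where nginx actually sent the request, and its port is the container port 3000 rather than a Service port.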

2. Add a Service to forward requests to Pods

Unlike an Ingress, a Service is an abstract component that has its own IP address and acts as a load balancer in front of a pool of pods.

Because pods can be created and deleted at any time, the Service provides a single stable endpoint with a fixed IP address, and balances traffic across the pods currently in its pool.

To enter the pool, a pod must carry the label matched by the selector declared in the application's service.yml manifest:

```yaml
apiVersion: v1
kind: Service
# ...
spec:
  type: ClusterIP
  ports:
    - name: http
      port: 80 # the service listens on this port
      targetPort: 3000 # and routes traffic to this port on the pods
      protocol: TCP
  selector:
    app: api # pods must use this value to enter the pool
```
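The selector-and-pool behavior can be pictured with a toy Python model: the Service keeps a fixed port (80) while its endpoint list, on targetPort 3000, is recomputed from whichever pods currently carry matching labels. This is a simplification: the second pod and its IP are made up, and kube-proxy does not necessarily round-robin (its iptables mode picks a backend at random); round robin here only keeps the example deterministic. `Pod`, `selects` and `ToyService` are hypothetical names.

```python
import itertools
from typing import Dict, Iterator, List, NamedTuple


class Pod(NamedTuple):
    name: str
    ip: str
    labels: Dict[str, str]


def selects(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """A selector matches a pod when every key/value pair appears in the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


class ToyService:
    """Hypothetical model: a stable port in front of a changing pool of pods."""

    def __init__(self, selector: Dict[str, str], port: int, target_port: int) -> None:
        self.selector = selector
        self.port = port
        self.target_port = target_port

    def endpoints(self, pods: List[Pod]) -> List[str]:
        # Recomputed from the current pods, so pods can come and go behind the Service.
        return [f"{p.ip}:{self.target_port}" for p in pods if selects(self.selector, p.labels)]

    def round_robin(self, pods: List[Pod]) -> Iterator[str]:
        # Cycle through the endpoints that exist right now.
        return itertools.cycle(self.endpoints(pods))
```

Clients only ever see port 80 on the Service's fixed IP; which pod answers is decided behind it, which is why pods can be replaced without anything upstream changing.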

3. Add a Deployment of pods to forward requests to the container

Pods are defined in the deployment.yml file:

```yaml
apiVersion: apps/v1
kind: Deployment
spec:
  replicas: 1 # how many pods I want to start
  selector:
    matchLabels:
      app: api # pods with this label belong to this Deployment; same value as spec.selector.app in service.yml
```

The pod template references the credentials used to pull the Docker image from the GitLab Container Registry:

```yaml
spec: # the pod template's spec (spec.template.spec)
  imagePullSecrets:
    - name: gitlab-registry
```
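The gitlab-registry name refers to a Secret of type kubernetes.io/dockerconfigjson, which must exist in the same namespace as the pods. How it is created in this setup isn't shown in this part. As a sketch, assuming registry.gitlab.com and placeholder deploy-token credentials, this Python snippet builds a Secret of the same shape that `kubectl create secret docker-registry` produces. `docker_config_json` and `pull_secret` are hypothetical helpers.

```python
import base64
import json
from typing import Any, Dict


def docker_config_json(registry: str, username: str, password: str) -> str:
    """JSON payload in the format used by kubernetes.io/dockerconfigjson Secrets."""
    # "auth" is base64("username:password"), as in Docker's own config.json.
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    return json.dumps({"auths": {registry: {"username": username, "password": password, "auth": auth}}})


def pull_secret(name: str, registry: str, username: str, password: str) -> Dict[str, Any]:
    """A Secret manifest (as a dict) that imagePullSecrets can reference by name."""
    payload = docker_config_json(registry, username, password)
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/dockerconfigjson",
        # Values under "data" must themselves be base64-encoded.
        "data": {".dockerconfigjson": base64.b64encode(payload.encode()).decode()},
    }
```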

I need only one container, running the image the pipeline built from the Dockerfile described in part III:

```yaml
  containers:
    - name: node
      image: __CI_REGISTRY_IMAGE__:__CI_COMMIT_SHORT_SHA__
```

I don't want to reserve any of the host's resources for these pods. This is where I save a lot compared to managed PaaS offerings, because I can deliberately overcommit resources [1]:

```yaml
      resources:
        requests:
          cpu: '0'
          memory: '0'
```

I don't want a single pod to consume all of the host's resources, so I limit how much each pod can use [2]:

```yaml
        limits:
          cpu: '0.125'
          memory: 64M
```
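These quantities are easy to misread, and the overcommit trade-off is plain arithmetic. This Python sketch parses a subset of the Kubernetes quantity format (plain numbers, the milli suffix `m`, decimal `k/M/G/T` and binary `Ki/Mi/Gi/Ti`; other forms are not handled) and computes how far a node would be overcommitted if every pod hit its limit at once. The node sizes and pod counts are hypothetical. Note that `64M` means 64,000,000 bytes, slightly less than `64Mi` (67,108,864 bytes). Since the scheduler places pods according to their requests, zero requests never block scheduling on CPU or memory grounds; the ratio is only a way to reason about the risk.

```python
from fractions import Fraction
from typing import Dict, Union

# A subset of the Kubernetes quantity suffixes: binary (IEC), decimal (SI) and milli.
SUFFIXES: Dict[str, Union[int, Fraction]] = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "m": Fraction(1, 1000),
}


def parse_quantity(text: str) -> Fraction:
    """Turn '0.125', '125m', '64M' or '64Mi' into an exact number (cores or bytes)."""
    for suffix, factor in SUFFIXES.items():
        if text.endswith(suffix):
            return Fraction(text[: -len(suffix)]) * factor
    return Fraction(text)  # no suffix: a plain decimal number


def overcommit_ratio(limit_per_pod: str, pod_count: int, allocatable: str) -> Fraction:
    """Sum of the pods' limits divided by what the node offers; above 1 means overcommitted."""
    return parse_quantity(limit_per_pod) * pod_count / parse_quantity(allocatable)
```

For example, 20 preview pods limited to 0.125 CPU on a 2-CPU node give a ratio of 5/4: harmless while most previews sit idle, contention if they all get busy at the same time.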

And I expose the port the application listens on:

```yaml
      ports:
        - containerPort: 3000
```

4. Container receives traffic and serves the application

Before proceeding, I want to point out that I'm using the same value api for:

- spec.rules[0].http.paths[0].backend.service.name in ingress.yml
- spec.selector.app in service.yml
- spec.selector.matchLabels.app in deployment.yml

Also, note that:

- In service.yml, I'm using a selector named app with the value api
- In deployment.yml, I'm using the same app label with the same value api

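It is critical to use the port the application listens on in the deployment.yml configuration (containerPort), and therefore in service.yml (targetPort). As for ingress.yml (service.port.number), the Ingress API defines that field as the Service's own port (80 in service.yml); ingress-nginx also accepts a value matching the Service's targetPort, which is why 3000 works here.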
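A mismatch between these names, labels and ports is accepted by `kubectl apply` and only shows up at request time, so a small check before deploying can help. This Python sketch works on manifests already loaded into dictionaries, for example with PyYAML's `yaml.safe_load` after token replacement; the dicts in the usage are written inline so it runs without third-party packages. The excerpts above don't show metadata.name or the pod template labels, so the values used for them (api and app: api) are assumptions, and numeric targetPort values are assumed. `check_wiring` is a hypothetical helper.

```python
from typing import Any, Dict, List

Manifest = Dict[str, Any]


def label_match(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """True when every selector key/value pair is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def check_wiring(ingress: Manifest, service: Manifest, deployment: Manifest) -> List[str]:
    """Return human-readable problems; an empty list means the manifests line up."""
    problems: List[str] = []

    # 1. The Ingress backend must name the Service.
    backend = ingress["spec"]["rules"][0]["http"]["paths"][0]["backend"]["service"]
    if backend["name"] != service["metadata"]["name"]:
        problems.append("Ingress backend does not name the Service")

    # 2. Both selectors must match the labels on the pods the Deployment creates.
    template = deployment["spec"]["template"]
    pod_labels = template["metadata"]["labels"]
    if not label_match(service["spec"]["selector"], pod_labels):
        problems.append("Service selector does not match the pod labels")
    if not label_match(deployment["spec"]["selector"]["matchLabels"], pod_labels):
        problems.append("Deployment selector does not match its pod template labels")

    # 3. The Service must forward to a port a container actually exposes
    #    (numeric targetPort assumed; named ports are not handled here).
    container_ports = {
        port["containerPort"]
        for container in template["spec"]["containers"]
        for port in container.get("ports", [])
    }
    if not any(p["targetPort"] in container_ports for p in service["spec"]["ports"]):
        problems.append("no Service targetPort matches a containerPort")

    return problems
```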

In every application, I'm using the COMMIT_SHORT_HASH variable (which exists only at pipeline runtime) to assign a value to the COMMIT environment variable (stored in the Docker image). Then, I can read this value from code:

Node.js: process.env.COMMIT

PHP: getenv('COMMIT')

Python: os.environ.get("COMMIT")

Ruby: ENV['COMMIT']
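On the Python side of that list, a hypothetical minimal WSGI application could report the commit like this; the repository's actual Python example may use a different framework. It reads COMMIT on each request and falls back to "unknown" when the variable is not set, for example when running outside the image.

```python
import os
from typing import Callable, Iterable, List, Tuple

StartResponse = Callable[[str, List[Tuple[str, str]]], object]


def app(environ: dict, start_response: StartResponse) -> Iterable[bytes]:
    """Hypothetical WSGI app that reports which commit the running image was built from."""
    commit = os.environ.get("COMMIT", "unknown")  # baked into the image at build time
    body = f"commit: {commit}\n".encode()
    start_response("200 OK", [("Content-Type", "text/plain"), ("Content-Length", str(len(body)))])
    return [body]
```

Locally, `wsgiref.simple_server.make_server("", 3000, app).serve_forever()` would serve it on port 3000, the port the manifests expect.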

[1] If you want to overcommit resources, be aware of this note from the documentation: if a container specifies its own memory limit but does not specify a memory request, Kubernetes automatically assigns a memory request that matches the limit. That is why the requests are set explicitly to 0.

[2] Remember that Kubernetes doesn't gently refuse access to more resources: a container that exceeds its memory limit is terminated (OOM-killed), while one that reaches its CPU limit is throttled. This is a good reason not to run shell commands, such as migrations or cron jobs, in running pods that serve web traffic.