February 20, 2022

Updated on Dec 13, 2025

Delivering Vercel-Style Developer Experience with Kubernetes and GitLab, part I

Cluster setup

This article is the first part of the Delivering Vercel-Style Developer Experience with Kubernetes and GitLab series.

Delivering Vercel-Style Developer Experience with Kubernetes and GitLab

- Introduction: Why build your own developer platform?

- Part I: Cluster setup

- Part II: GitLab pipeline and CI/CD configuration

- Part III: Applications and the Dockerfile

- Part IV: Kubernetes manifests

As mentioned in the introduction, this part is about building a cheap, easy-to-rebuild Kubernetes cluster to get the ball rolling. I'd like to test things on a bare setup before moving to managed clusters such as AKS, EKS or GKE.

- Start a fresh server

- Configure DNS record

- Download k0s

- Generate k0s config file

- Add cert-manager and ingress-nginx

- Install and start k0s cluster

- Install Lens and connect to k0s cluster (optional)

- Additional resources

- An overview of how incoming traffic flows

- Next step

To run this setup, I need a Linux system with at least 2 GB of RAM¹ and a little more than the default 8 GB of disk space, so that logs won't fill up all the available space. This is definitely not ideal, but my goal here is to build a cheap setup.

I also need a wildcard subdomain pointing to this server. I'm using *.k0s.jxprtn.dev.

My server setup is:

- 2GB RAM

- 2 vCPUs

- 16GB disk

- Amazon Linux 2023

1. Start a fresh server

k0s can run on any server from any cloud provider, as long as it runs a Linux distribution with either a systemd or OpenRC init system.

AWS is my go-to provider, but going with any other cloud service provider shouldn't be a problem.

All the aws commands I run on my workstation can also be executed from AWS CloudShell or performed from the web-based AWS Management Console.

First, I launch a t3a.small EC2 instance running Amazon Linux 2023.

I'm providing my SSH key with the --key-name flag, and I'm attaching my EC2 instance to an existing public subnet and to a security group that allows TCP traffic on ports 22, 80, 443 and 6443:

```shell
# replace the subnet and security group IDs with your own;
# /dev/xvda is the root device name of Amazon Linux 2023 AMIs
aws ec2 run-instances \
  --region us-east-1 \
  --image-id ami-08d7aabbb50c2c24e \
  --count 1 \
  --instance-type t3a.small \
  --key-name k0s \
  --block-device-mappings \
    'DeviceName=/dev/xvda,Ebs={VolumeSize=16}' \
  --subnet-id subnet-00cf310b \
  --security-group-ids sg-4327b00b \
  --associate-public-ip-address \
  --tag-specifications \
    'ResourceType=instance,Tags=[{Key=project,Value=k0sTest}]'
```

When I want to clean up everything and shut down the instances I've created, I use the tag set with --tag-specifications above to select them and terminate them:

```shell
# store the IDs of the running instances in a variable
INSTANCES=$(aws ec2 describe-instances \
  --query 'Reservations[*].Instances[*].[InstanceId]' \
  --filters \
    Name=tag:project,Values=k0sTest \
    Name=instance-state-name,Values=running \
  --output text)
# terminate the instances
aws ec2 terminate-instances --instance-ids $INSTANCES
```
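If no running instance carries the tag, `$INSTANCES` is empty and `aws ec2 terminate-instances --instance-ids` stops with a usage error about the missing argument. A minimal guard, sketched here as a hypothetical `terminate_tagged` helper (not part of the original setup), skips the call in that case:

```shell
#!/usr/bin/env bash
# Hypothetical helper: terminate the given instance IDs, but only if there are any.
# $1: whitespace-separated instance IDs, e.g. the $INSTANCES variable above.
terminate_tagged() {
  local ids="$1"
  # strip all whitespace to detect an empty or blank list
  if [ -z "${ids//[[:space:]]/}" ]; then
    echo "no running k0sTest instances, nothing to terminate"
    return 0
  fi
  # $ids is intentionally unquoted: each instance ID becomes its own argument
  aws ec2 terminate-instances --instance-ids $ids
}
```

It would be called as `terminate_tagged "$INSTANCES"` in place of the last command.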

2. Configure DNS record to point a wildcard subdomain to the server

Since I'm using AWS Route53, I've made a script to speed up the operation.

I'm using it as follows to update the existing A record:

```shell
HOSTED_ZONE_ID=$(aws route53 list-hosted-zones \
  --query 'HostedZones[*].[Id,Name]' \
  --output text \
  | grep k0s.jxprtn.dev | awk '{ print $1 }')
K0S_IP=$(aws ec2 describe-instances \
  --query 'Reservations[*].Instances[*].PublicIpAddress' \
  --filters \
    Name=tag:project,Values=k0sTest \
    Name=instance-state-name,Values=running \
  --output text)
curl -sSLf https://gist.githubusercontent.com/jxprtn/9051676d7b2747f080cd193198e18091/raw/1686b13e09431cd98baf027577d20da572b880df/updateRoute53.sh \
  | bash -s -- ${HOSTED_ZONE_ID} '\\052.k0s.jxprtn.dev.' ${K0S_IP}
```

Now, any subdomain ending with .k0s.jxprtn.dev, such as abcd1234.k0s.jxprtn.dev, is routed to my EC2 instance.

This way, I don't have to add a new CNAME record each time I create a new deployment.
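Route 53 API responses represent the leading asterisk of a wildcard record name as the octal escape code `\052` (octal 52 is 42, the ASCII code of `*`), which explains the odd-looking record name passed to the script. The doubled backslash in that command presumably survives one more level of escaping inside the gist; that is an assumption, not something checked here. A small sketch, with hypothetical helper names, converting between both forms:

```shell
#!/usr/bin/env bash
# Hypothetical helpers converting a wildcard record name to and from the
# octal escape form used by Route 53 (\052 is the octal code of "*").
to_route53_name() {
  # replace a leading "*" with the four characters \052
  printf '%s\n' "$1" | sed 's/^\*/\\052/'
}

from_route53_name() {
  # printf %b expands \0NNN octal escapes, so \052 becomes "*"
  printf '%b\n' "$1"
}
```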

3. Download k0s

The $K0S_IP variable has already been set in section 2 and contains the server's public IP address.

The private key I attached to the server is in my Downloads folder, and I use it to SSH into the server:

```shell
$ ssh -i ~/Downloads/k0s.pem ec2-user@$K0S_IP
```

Once I'm connected to the EC2 instance, I download the k0s binary:

```shell
# download the k0s binary:
curl -sSLf https://get.k0s.sh | sudo K0S_VERSION=v1.34.2+k0s.0 sh
```

4. Generate k0s config file

Before starting the k0s cluster, I'm creating a k0s.yaml config file:

```shell
# generate a default config file:
sudo k0s config create > k0s.yaml
# add the server's public IP to the API server SANs
# to grant access from the outside
PUBLIC_IP=$(curl -s ifconfig.me)
sed -i 's/^\([[:space:]]*\)sans:$/&\n\1- '"$PUBLIC_IP"'/' k0s.yaml
```

The configuration file has been generated, and the sed command has added the EC2 instance's public IP address to the list of Subject Alternative Names (sans), next to its private IP address. The API server certificate is therefore also valid for the public address, which exposes the Kubernetes API to the internet.

This isn't good practice: in a real setup, I'd rather use AWS VPN or my own OpenVPN setup to join the EC2 instance's network and query the Kubernetes API from the internal network.
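The sed one-liner in this step only works if its pattern matches the `sans:` line of the generated file, and running it twice lists the IP twice. A slightly more defensive sketch, as a hypothetical `add_san` helper, reuses whatever indentation it finds and skips IPs that are already listed. It assumes GNU sed and that list items sit at the same indentation as the `sans:` key:

```shell
#!/usr/bin/env bash
# Hypothetical helper: add an IP address to the "sans:" list of a k0s.yaml file.
# Assumes GNU sed (for \n in the replacement) and list items indented like their key.
add_san() {
  local file="$1" ip="$2"
  # already present as a list item anywhere in the file? then re-runs are a no-op
  if awk -v ip="$ip" '$1 == "-" && $2 == ip { found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  # capture the indentation of "sans:" and insert "- <ip>" right below it
  sed -i "s/^\([[:space:]]*\)sans:\$/&\n\1- ${ip}/" "$file"
}
```

On the server, it would be used as `add_san k0s.yaml "$(curl -s ifconfig.me)"`.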

5. Add ingress-nginx and cert-manager charts

Update: ingress-nginx is being retired; see the Kubernetes blog post "Ingress NGINX Retirement: What You Need to Know".

In the k0s.yaml config file, I'm adding a reference to the ingress-nginx and cert-manager charts to enable their automatic deployment when the cluster starts up.

```shell
sed -i '/ helm:/r /dev/stdin' k0s.yaml << 'EOF'
      repositories:
      - name: ingress-nginx
        url: https://kubernetes.github.io/ingress-nginx
      - name: jetstack
        url: https://charts.jetstack.io
      charts:
      - name: ingress-nginx-stack
        chartname: ingress-nginx/ingress-nginx
        version: "4.14.1"
        values: |
          controller:
            hostNetwork: true
            service:
              type: NodePort
        namespace: default
      - name: cert-manager-stack
        chartname: jetstack/cert-manager
        version: "1.19.2"
        values: |
          crds:
            enabled: true
        namespace: default
EOF
```

Note that I'm setting hostNetwork to true to bind the ingress controller to the host's ports 80 and 443. This configuration is not recommended, but it's an easy way to work around the lack of a load balancer in front of the cluster.
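The `sed '/ helm:/r /dev/stdin'` command relies on a GNU sed extension: the `r` command appends the content of a file after the matching line, and GNU sed treats the name `/dev/stdin` as standard input, here the heredoc. A toy reproduction, wrapped in a hypothetical `append_after` helper, makes the behavior easy to check. Since standard input can only be read once, the address should match exactly one line:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append standard input after the line matching a regex.
# Relies on GNU sed, where "r /dev/stdin" reads the text to append from stdin.
append_after() {
  local pattern="$1" file="$2"
  sed -i "/${pattern}/r /dev/stdin" "$file"
}
```

On the server, `sudo k0s config validate --config k0s.yaml` should catch indentation mistakes in the result before the cluster starts.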

To request TLS certificates, I need a ClusterIssuer resource. I'm only writing its Kubernetes manifest for now, and I'll leverage the Manifest Deployer feature of k0s to create the resource once the cluster is running:

```shell
# create the manifest
cat > cluster-issuer.yaml << EOF
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt
  namespace: default
spec:
  acme:
    email: n0reply@n0wh3r3.com
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: letsencrypt
    solvers:
    - http01:
        ingress:
          class: nginx
EOF
```

The email address I'm providing here receives all the Let's Encrypt expiration notices. They can get annoying, so I'm using a fake one.
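Since this cluster is meant to be torn down and rebuilt often, every rebuild requests brand-new certificates, which can run into the rate limits of the Let's Encrypt production endpoint. Let's Encrypt also offers a staging endpoint, `https://acme-staging-v02.api.letsencrypt.org/directory`, with much higher limits but untrusted certificates. A sketch of a hypothetical `render_cluster_issuer` function that produces the same kind of manifest for either endpoint:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a ClusterIssuer manifest for a given ACME endpoint.
# $1: issuer name, $2: ACME directory URL, $3: contact email
# ClusterIssuer is cluster-scoped, so metadata.namespace is omitted.
render_cluster_issuer() {
  local name="$1" server="$2" email="$3"
  cat <<EOF
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: ${name}
spec:
  acme:
    email: ${email}
    server: ${server}
    privateKeySecretRef:
      name: ${name}
    solvers:
    - http01:
        ingress:
          class: nginx
EOF
}
```

For example, `render_cluster_issuer letsencrypt-staging https://acme-staging-v02.api.letsencrypt.org/directory n0reply@n0wh3r3.com > cluster-issuer-staging.yaml` writes a staging issuer that can sit next to the production one.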

6. Install and start k0s cluster

Now, I can install a single-node cluster:

```shell
$ sudo k0s install controller --single -c k0s.yaml
$ sudo k0s start
# wait a few seconds, then:
$ sudo k0s status
Version: v1.34.2+k0s.0
Process ID: 3909
Role: controller
Workloads: true
SingleNode: true
Kube-api probing successful: true
Kube-api probing last error:
# wait a minute, then check whether the control plane is up:
$ sudo k0s kubectl get nodes
No resources found
# not ready yet, wait and retry:
$ sudo k0s kubectl get nodes
NAME               STATUS   ROLES           AGE   VERSION
ip-172-31-13-250   Ready    control-plane   5s    v1.34.2+k0s
# let's see if the ingress-nginx and cert-manager charts
# have been deployed successfully:
$ sudo k0s kubectl get deployments
NAME                             READY   UP-TO-DATE   AVAILABLE   AGE
cert-manager-stack               1/1     1            1           8m26s
cert-manager-stack-cainjector    1/1     1            1           8m26s
cert-manager-stack-webhook       1/1     1            1           8m26s
ingress-nginx-stack-controller   1/1     1            1           7m39s
```
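The "wait and retry" steps above can be scripted. `kubectl wait --for=condition=Ready nodes --all` is not enough on its own at this stage, because it typically fails right away with a "no matching resources found" error while no Node object exists yet. A generic polling helper, sketched below under the hypothetical name `wait_for`, retries any command until it succeeds or the attempts run out:

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command until it succeeds or the attempts run out.
# Usage: wait_for <attempts> <delay-in-seconds> <command> [args...]
wait_for() {
  local attempts="$1" delay="$2"
  shift 2
  local i
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0   # the command succeeded
    fi
    sleep "$delay"
  done
  return 1       # every attempt failed
}
```

Waiting for the node could then look like `wait_for 30 10 sh -c 'sudo k0s kubectl get nodes --no-headers | grep -qw Ready'`; `-w` keeps a `NotReady` status from matching.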

Then, I create a symlink in the /var/lib/k0s/manifests/cert_manager folder pointing to the cluster-issuer.yaml file I created earlier. Instead of applying it with kubectl, I let the k0s Manifest Deployer deploy it automatically:

```shell
# create the symlink
sudo mkdir -p /var/lib/k0s/manifests/cert_manager
sudo ln -s "$(pwd)/cluster-issuer.yaml" /var/lib/k0s/manifests/cert_manager/
```

Then, I check that the ClusterIssuer resource has been created:

```shell
# check that the resource has been deployed
$ sudo k0s kubectl get clusterissuers.cert-manager.io
NAME          READY   AGE
letsencrypt   True    10s
```

7. (optional) Install Lens and connect to k0s cluster

Lens is a graphical alternative to kubectl; it makes interacting with a cluster easier and debugging faster for me.

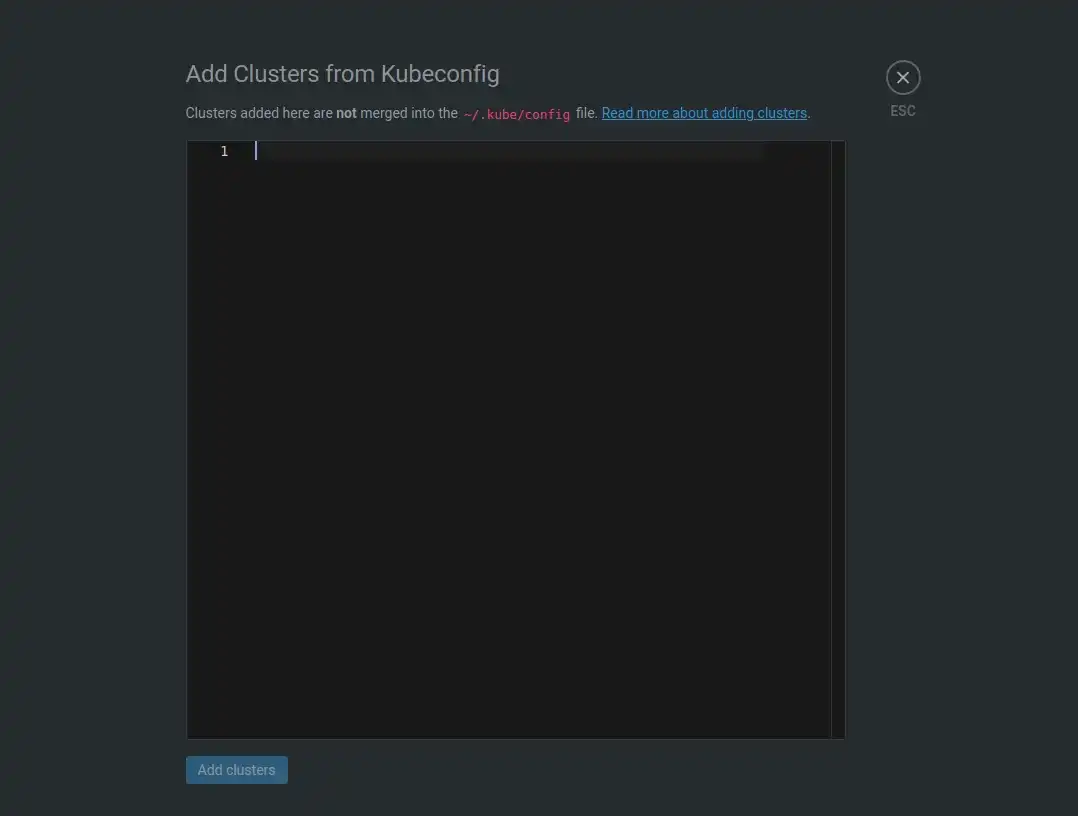

Once I've installed Lens, I skip the subscription process and add the cluster with the Add Kubeconfig from clipboard link. It shows an input field where I can paste a user configuration.

Adding a new cluster to Lens

To get these credentials, I go back to the server, copy the whole YAML output of this command, and paste it into Lens:

```shell
$ sudo k0s kubeconfig admin \
  | sed 's/'$(ip r | grep default | awk '{ print $9}')'/'$(curl -s ifconfig.me)'/g'
apiVersion: v1
clusters:
- cluster:
    server: https://3.224.127.184:6443
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUS...
  name: local
contexts:
- context:
    cluster: local
    namespace: default
    user: user
  name: Default
current-context: Default
kind: Config
preferences: {}
users:
- name: user
  user:
    client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0...
    client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRV...
```

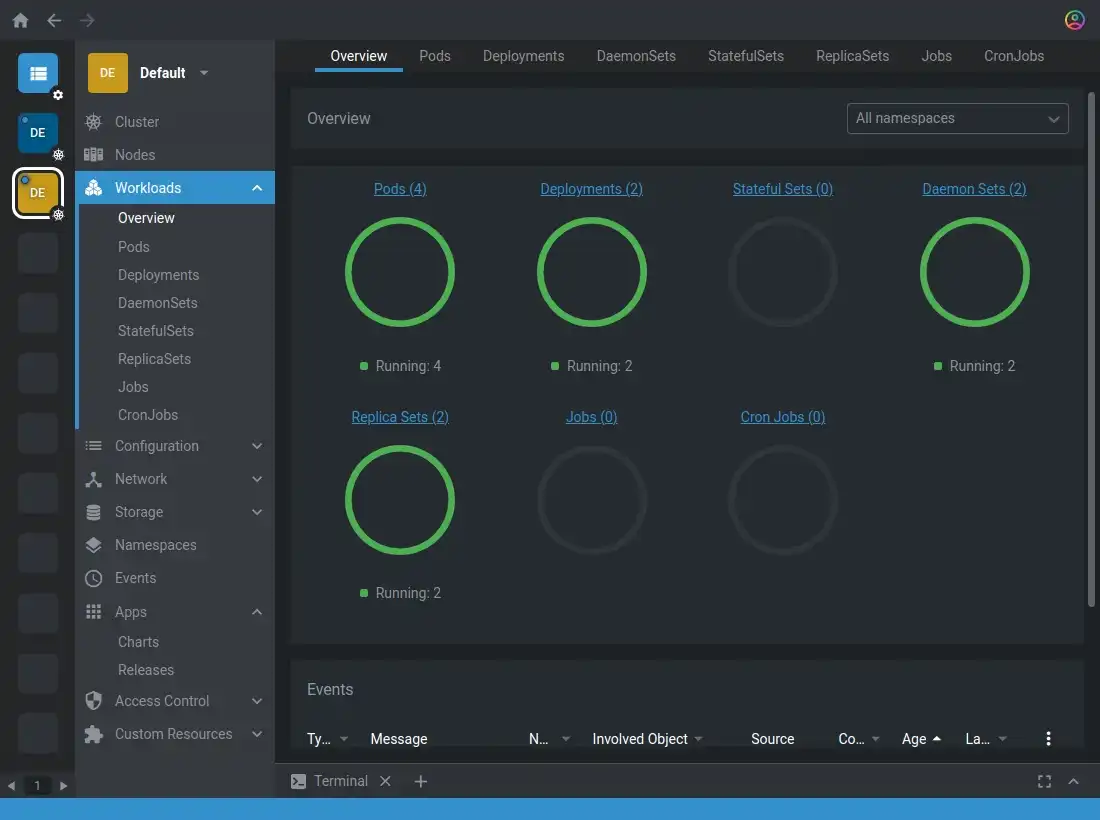

Now I'm able to connect to the cluster and show an overview of the workload in all namespaces:

Lens overview of the new cluster
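The sed command in this step swaps the server's private IP for its public one anywhere in the output. Connecting to the public address only works because that address was added to the API server SANs in step 4; otherwise certificate verification would fail. A more targeted sketch, as a hypothetical `rewrite_server` helper, touches only the `server:` URL, keeping its scheme and port:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a kubeconfig on stdin and point its "server:" URL at $1,
# e.g. https://<private-ip>:6443 becomes https://$1:6443. Other lines pass through.
rewrite_server() {
  local host="$1"
  sed -E "s#^([[:space:]]*server: https://)[^:/]+(:[0-9]+)?#\1${host}\2#"
}
```

Saving the result, for instance with `sudo k0s kubeconfig admin | rewrite_server "$(curl -s ifconfig.me)" > k0s.kubeconfig`, also gives a file that kubectl on a workstation can use through `KUBECONFIG=k0s.kubeconfig`. It contains admin credentials, so it should be kept private.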

8. Additional resources

- While going through the manual steps is valuable for understanding how everything works, I've created this script that automates the entire setup process:

```shell
curl -sSLf https://gist.githubusercontent.com/jxprtn/64efd45819bb22521d419c3ba4176ef6/raw/c4f582f81c1a12bf02c280ecf64936c7b66e44b9/setup-k0s.sh | sh
```

- I've also created this script to retrieve the correct VPC ID, subnet ID and AMI ID, create or reuse the security group, start an EC2 instance, and set up k0s in a single command (using the script mentioned above).

9. An overview of how incoming traffic flows

Because I've set hostNetwork to true when installing ingress-nginx, the following Kubernetes endpoint has been created:

```shell
$ sudo k0s kubectl -n default get endpoints
NAME                           ENDPOINTS                          AGE
ingress-nginx-...-controller   172.31.12.80:443,172.31.12.80:80   2h
```

It allows the host's incoming HTTP and HTTPS traffic to be forwarded to the cluster and, more specifically, to this pod (more on this in part IV):

```shell
$ sudo k0s kubectl get pod -A -l app.kubernetes.io/name=ingress-nginx
NAMESPACE   NAME                   READY   STATUS    RESTARTS   AGE
default     ingress-nginx-164...   1/1     Running   0          1d
```
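Because the controller runs with hostNetwork, its ports show up directly on the host, so `ss -ltn` on the server should list listeners on 80 and 443. The hypothetical helper below only filters that output for the ports of interest; it is a sketch that assumes the column layout of iproute2's `ss`, where the fourth column is the local address and port:

```shell
#!/usr/bin/env bash
# Hypothetical helper: given `ss -ltn` output on stdin, print each requested
# port that has a listening socket, in the order the ports were requested.
listening_ports() {
  awk -v want="$*" '
    BEGIN { n = split(want, ports, " ") }
    NR > 1 {
      addr = $4
      sub(/.*:/, "", addr)   # keep only the port after the last colon
      for (i = 1; i <= n; i++) if (addr == ports[i]) seen[addr] = 1
    }
    END { for (i = 1; i <= n; i++) if (ports[i] in seen) print ports[i] }
  '
}
```

On the server, `ss -ltn | listening_ports 80 443` should print both ports once ingress-nginx is up.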

The diagram below shows how incoming traffic flows through the components. Now that the cluster is configured, the first three steps are in place:

```
✅ 1. client        DNS ok and 443/TCP port open
      ↓
✅ 2. host          k0s installed
      ↓
✅ 3. ingress       ingress-nginx installed
      ↓
   4. service
      ↓
   5. pod
      ↓
   6. container
      ↓
   7. application
```

Next step

My cluster is now ready² to host applications. In the next parts, I'll show how to automate the deployment of any branch or any commit of a repository from GitLab CI/CD, generate a unique URL à la Vercel, and promote any deployment to production.

¹ I tried a t2.micro with 1 GB of RAM, which can run for free as part of the AWS Free Tier offer and meets the minimum system requirements of k0s for a controller+worker node, but it turned out to be pretty unstable.

² Ready for testing: there's a lot to say about this setup, but it's not meant to be a permanent solution.